The Complete Guide to Creating a Self-Driving Car Within GTA

Let's build a self-driving car! Do you think hardware components are a problem? In fact, all you need is your PC. Don't worry if you don't have an amazing graphics card, because we will use a PC game from the early 2000s as our simulation environment. To follow along with this project, you need some basic Python skills along with an understanding of machine learning, especially Convolutional Neural Networks (CNNs). So, without further ado, let's get started.

As you may have inferred from the image, we will be turning an outdated computer game (GTA Vice City) into a simulation environment for self-driving cars. We must find a way to train our model in order to accomplish this. There are two ways we can do this: either through supervised learning or reinforcement learning. We will be concentrating on the supervised learning approach in this project. To turn this video game, or any other, into a simulation environment for self-driving cars, we need to accomplish the following:

- Data Collection Method: In our case, data collection involves capturing labeled images while a human is driving a car to generate a dataset. Each image is labeled with the action that the human player took at a specific point in time.

- Machine Learning Model: In the second step, we will train a machine learning model using the data collected in the first step. We will implement the model using PyTorch. The model's architecture is based on Residual Neural Networks, commonly known as ResNets. Unlike conventional Convolutional Neural Networks (CNNs), ResNets feature residual connections between convolutional layers. I'll explain later why this is a better design choice.

- Training Platform: 🚀 To train the model, you can use your own PC if it is equipped with a decent GPU. However, you'll need to install the CUDA (Compute Unified Device Architecture) drivers so that PyTorch can use the GPU. If you don't have access to a GPU, local training is not recommended.

  The solution I recommend is Google Colab, which provides a Jupyter Notebook interface and comes with all the necessary tools pre-installed. You can also store your data in Google Drive, which makes GPU-accelerated training more convenient. 📦💻😊

- Deployment Platform for Your Code: Finally, we'll create a piece of code that captures an image from the game, performs inference using the model, and takes an action that changes the state of the environment. This setup is known as a closed-loop control system because each action changes the state of the game, which in turn affects the next image that will be captured. 🎮🤖

Data Collection

Please find my complete code for collecting the training data here. Let's break this code down a little bit. First, I used pynput for keyboard events, mss for capturing the screen, and OpenCV for manipulating the images.

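    import cv2

    # grab_screen, path, and forward_img_counter are defined elsewhere in the full script.
    def on_press(key):
        global forward_img_counter
        try:
            if key.char == 'o':  # 'o' is the key that drives the car forward
                forward_img_counter += 1
                screen = grab_screen()  # capture the current game frame
                img_dir = path + 'forward/forward.' + str(forward_img_counter) + '.jpg'
                cv2.imwrite(img_dir, screen)  # save the frame in the 'forward' class directory
        except AttributeError:
            # Special keys (Shift, Ctrl, ...) have no .char attribute; ignore them.
            pass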

Examining this block of code reveals that its purpose is to take a screenshot each time the player interacts with the game by pressing a particular key. The idea behind this is that every key in the game environment has a specific function. The screenshot is then stored in a directory dedicated to that action. Alternatively, you could store all of the images in one directory and record the labels in a CSV file, but I went with a more straightforward method for clarity's sake.
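For readers curious about the CSV alternative mentioned above, here is a minimal sketch of how the labels could be recorded. It is not part of the original project: the function names `append_label` and `read_labels`, the column names, and the `"left"` action are all hypothetical choices made for illustration.

```python
import csv
from pathlib import Path


def append_label(csv_path, image_name, action):
    """Append one (image file, action) row, writing a header for a new file."""
    csv_path = Path(csv_path)
    is_new = not csv_path.exists()
    with csv_path.open("a", newline="") as handle:
        writer = csv.writer(handle)
        if is_new:
            writer.writerow(["image", "action"])  # hypothetical column names
        writer.writerow([image_name, action])


def read_labels(csv_path):
    """Return the recorded rows as a list of (image, action) tuples."""
    with Path(csv_path).open(newline="") as handle:
        return [(row["image"], row["action"]) for row in csv.DictReader(handle)]
```

With this layout, every screenshot goes into a single directory and a call such as `append_label("labels.csv", "frame.1.jpg", "forward")` records which action it belongs to. The one-directory-per-class approach used in this article avoids the extra file at the cost of a slightly more rigid folder structure.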

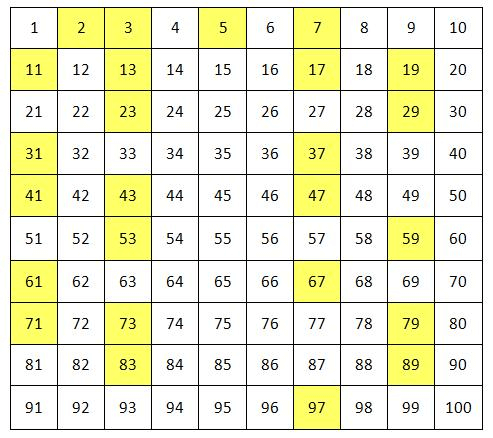

We will be using a classification model, so it's important to keep track of the number of images in each class. To train the model effectively, the images should be distributed as evenly as possible across the classes.
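One way to keep an eye on the class balance is to count the files in each class directory from time to time. The sketch below assumes the one-directory-per-class layout used in the data collection code; the helper names `count_images_per_class` and `imbalance_ratio` are hypothetical and not taken from the original code.

```python
from pathlib import Path


def count_images_per_class(dataset_dir, extension=".jpg"):
    """Map each class sub-directory name to the number of images it holds."""
    counts = {}
    for class_dir in sorted(Path(dataset_dir).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1 for item in class_dir.iterdir()
                if item.suffix.lower() == extension
            )
    return counts


def imbalance_ratio(counts):
    """Largest class size divided by the smallest; 1.0 means perfectly balanced."""
    smallest = min(counts.values())
    if smallest == 0:
        return float("inf")  # at least one class has no images yet
    return max(counts.values()) / smallest
```

A ratio well above 1 suggests spending more driving time on the under-represented actions before training.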

Our approach is to run the code in the background while you're playing the video game. I recommend using the same car during both the data generation and testing phases. This keeps the data consistent and lowers the risk that your machine learning model fails to learn the relationship between the input images and the actions. While it's possible to train a model to drive every car in the game, doing so would require collecting additional data. I collected about 80,000 images for my training set using the same car.

The Machine Learning Model

The model's mission is to map images from the environment to one of 4 actions. In other words, it classifies each image into the proper action to take at that moment. In this project I will be using ResNets instead of conventional CNNs, since ResNets have been shown to train faster and to produce a more accurate model overall; you can read more in the ResNets paper. The following code shows how to implement the ResNet block using PyTorch.

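    import torch
    import torch.nn as nn
    import torch.nn.functional as f

    class ResNet(nn.Module):
        """Residual block that halves the spatial size and outputs out_channels channels."""

        def __init__(self, in_channels, out_channels):
            super(ResNet, self).__init__()
            # The main path produces (out_channels - in_channels) channels so that
            # concatenating the input in forward() yields exactly out_channels.
            self.conv1 = nn.Conv2d(in_channels, out_channels - in_channels,
                                   kernel_size=3, stride=1, padding=1)
            self.n1 = nn.InstanceNorm2d(out_channels - in_channels, affine=True)
            self.conv2 = nn.Conv2d(out_channels - in_channels, out_channels - in_channels,
                                   kernel_size=3, stride=2, padding=1)
            self.n2 = nn.InstanceNorm2d(out_channels - in_channels, affine=True)
            self.mish = nn.Mish(inplace=True)

        def forward(self, x):
            identity = x
            x = self.mish(self.n1(self.conv1(x)))
            x = self.n2(self.conv2(x))  # stride 2 halves the height and width
            # Skip connection: downsample the input and concatenate along the channel axis.
            # Assumes even height and width so that both paths have the same spatial size.
            x = torch.cat((x, f.max_pool2d(identity, (2, 2))), 1)
            return self.mish(x)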

You can find the complete implementation of the model here.
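To see why the concatenation in the block above works, it helps to trace the tensor shapes by hand. The sketch below does that arithmetic in plain Python using the standard output-size rule for convolutions; the helper names `conv_out_size` and `block_output_shape` are hypothetical and exist only for this illustration.

```python
def conv_out_size(size, kernel_size, stride, padding):
    """Output length of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel_size) // stride + 1


def block_output_shape(in_channels, out_channels, height, width):
    """Return (channels, height, width) after one block, or raise on a mismatch."""
    # Main path: conv1 (stride 1) keeps the size, conv2 (stride 2) halves it.
    h = conv_out_size(conv_out_size(height, 3, 1, 1), 3, 2, 1)
    w = conv_out_size(conv_out_size(width, 3, 1, 1), 3, 2, 1)
    # Skip path: 2x2 max pooling with stride 2 and no padding.
    pooled = (height // 2, width // 2)
    if (h, w) != pooled:
        raise ValueError(f"main path {(h, w)} and skip path {pooled} differ")
    # Concatenation adds the channel counts of the two paths.
    return ((out_channels - in_channels) + in_channels, h, w)


print(block_output_shape(3, 16, 64, 64))  # (16, 32, 32)
```

For an odd input size such as 65, the main path yields 33 while the pooled skip path yields 32, so the two tensors cannot be concatenated. Resizing captured frames to even dimensions avoids this.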

Train Your Model

The next step is to train the model using Google Colab. Start by compressing your data and uploading it to your Google Drive. Then connect your Colab runtime to your Google Drive and access the data. For training, use the cross-entropy loss function with class weights calculated from the number of images in each class. This step keeps the model from simply favoring the classes that have the most images.
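The exact weighting formula is in the linked code; as an illustration only, here is a minimal sketch of one common scheme, inverse-frequency weighting. The function name `class_weights` and the example counts are made up for this sketch and are not the real class distribution of the dataset.

```python
def class_weights(counts):
    """Inverse-frequency weights: total / (number_of_classes * class_count)."""
    total = sum(counts)
    num_classes = len(counts)
    return [total / (num_classes * count) for count in counts]


# Hypothetical image counts for the 4 action classes (not the real dataset split).
example_counts = [40000, 20000, 10000, 10000]
weights = class_weights(example_counts)
print(weights)  # [0.5, 1.0, 2.0, 2.0]

# In PyTorch, the list can then be passed to the loss function, for example:
#   criterion = torch.nn.CrossEntropyLoss(weight=torch.tensor(weights))
```

Rare classes receive larger weights, so a mistake on a rarely pressed key costs the model more than a mistake on the most common one.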

Here again is the link to the complete code.

Deploy Your Model

The approach used here is very similar to the code responsible for data collection: it uses the same modules to capture images from the screen and generates keyboard key events. The difference between this piece of code and the previous one is that it runs inference on the trained model.

    # Prepare the image frame as a 1 x 3 x Image_width x Image_hight float tensor.
    # Note: view() only reshapes; it does not reorder the axes of frame.
    frame = torch.tensor(frame, dtype=torch.float32).view(1, 3, Image_width, Image_hight)
    raw_output = self.pilot_model(frame)  # run inference on the model
    output = torch.argmax(raw_output)     # next action selected by the model
    if output == 0:
        pyautogui.keyDown('o')
        t.sleep(self.loop_delay)  # hold the key down for the loop delay
        pyautogui.keyUp('o')
        print('forward')

This code runs inside a while loop. The loop delay holds each virtual key down long enough for the keypress to be properly recognized by your system.
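The overall closed loop can be sketched as follows. This is a simplified illustration rather than the project's actual deployment code: the `drive` function, its parameters, and every entry of `ACTION_KEYS` other than `0 -> 'o'` are assumptions made for this example. The capture, inference, and key functions are passed in as arguments so the loop itself can be tried without the game running.

```python
import time

# Action index -> key. Only 0 -> 'o' (forward) appears in the article;
# the other three keys are placeholders for this sketch.
ACTION_KEYS = {0: "o", 1: "k", 2: "l", 3: "i"}


def argmax(scores):
    """Index of the largest score."""
    return max(range(len(scores)), key=scores.__getitem__)


def drive(capture, predict, key_down, key_up,
          loop_delay=0.1, max_steps=None, sleep=time.sleep):
    """Capture a frame, pick an action, press its key, and repeat."""
    step = 0
    while max_steps is None or step < max_steps:
        frame = capture()                # current state of the game
        action = argmax(predict(frame))  # class with the highest score
        key = ACTION_KEYS[action]
        key_down(key)                    # act on the environment
        sleep(loop_delay)                # hold the key so the press registers
        key_up(key)
        step += 1                        # the next capture sees the new state
```

In a real run, `key_down` and `key_up` could be `pyautogui.keyDown` and `pyautogui.keyUp`, `capture` would grab the screen, and `predict` would wrap the trained model.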

Results 🚗💨

The following video showcases how the model we've built and trained in this tutorial will hopefully drive. At this point, congratulations! 🎉 You've created your very own self-driving car in the metaverse. 🌟🚀😂 Now you can consider launching your own startup and watch the money roll in! 💰💵💰😂😂

Thank you for following along to the end. Please feel free to read the research paper for this project.